Using RTDs

This application note shows you how to measure temperature using RTDs connected to the Mosaic 24/7 Data Acquisition Wildcard. As many as three RTDs can be interfaced to a single 24/7 Wildcard, allowing you to measure temperatures with errors of less than ±0.1°C.

What is an RTD?

An RTD (Resistance Temperature Device) is a temperature-sensitive resistor made out of platinum, either as a coil of wire or a thin film, usually encapsulated on a glass or ceramic substrate.

Because RTDs are simply resistors, they can be fabricated in all shapes and sizes, or embedded in all sorts of probes. For industrial use, you usually purchase them mounted in probes that include connectors, sheaths, and handles for convenient mechanical placement. But you can also purchase RTDs as simple components, and easily incorporate them into your design.

How do RTDs work?

All metals increase in resistance with temperature, so their change in resistance can be used to measure temperature. The resistance of a metal is almost directly proportional to absolute temperature, which measures the average thermal energy of the particles in an object relative to absolute zero, the point at which molecular motion is at its minimum.

Absolute temperature is measured in Kelvin, a unit equal in size to the degree Celsius, but with 0 K at absolute zero, whereas 0°C is the freezing point of water. Calculations involving absolute temperature must use Kelvin, but for the difference between two temperatures, Kelvin and degrees Celsius are interchangeable. Temperatures in Kelvin are never negative. To convert from degrees Celsius to Kelvin, add 273.15 (the freezing point of water expressed in Kelvin); to convert from Kelvin to degrees Celsius, subtract it. Equivalently, absolute zero is -273.15°C:

0K = -273.15°C
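
The conversion rule can be written as a pair of helper functions. This is a minimal Python sketch; the function and constant names are hypothetical and are not part of any Mosaic library.

```python
# Offset between the Kelvin and Celsius scales: 0 K = -273.15 °C.
KELVIN_OFFSET = 273.15


def celsius_to_kelvin(t_c: float) -> float:
    """Convert an absolute temperature from degrees Celsius to Kelvin."""
    return t_c + KELVIN_OFFSET


def kelvin_to_celsius(t_k: float) -> float:
    """Convert an absolute temperature from Kelvin to degrees Celsius."""
    return t_k - KELVIN_OFFSET


print(celsius_to_kelvin(0.0))    # 273.15
print(kelvin_to_celsius(0.0))    # -273.15

# A temperature *difference* needs no offset: 10 °C apart is also 10 K apart.
delta_k = celsius_to_kelvin(30.0) - celsius_to_kelvin(20.0)
print(round(delta_k, 9))         # 10.0
```

The last lines show why only absolute temperatures need the offset: it cancels when two temperatures are subtracted.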

As mentioned above, the electrical resistance of metals is almost directly proportional to absolute temperature, but in many metals other physical effects distort that proportionality slightly. Different metals, including copper, nickel and platinum, are used for different temperature ranges. Platinum is the best choice because it has a very high melting point and does not readily corrode, and its resistance is quite linear with temperature; in fact, it is almost perfectly proportional to absolute temperature.

If the proportionality were exact, the temperature coefficient of resistance (the change in resistance per Kelvin or degree Celsius) of a sensor with a resistance of 100Ω at 273.15 Kelvin (0°C) would be

$$\frac{\Delta R}{\Delta T} = \frac{100\,\Omega}{273.15\,\mathrm{K}} \approx 0.3661\,\Omega/\mathrm{K}$$

This value holds only for a sensor made from this ideal metal and shaped to have a resistance of 100Ω at 0°C. To turn it into a property of the metal itself, we divide by the sensor's resistance at a reference temperature. The standard reference point is 0°C, where this sensor measures 100Ω. The result is the normalized temperature coefficient of resistance (TCR), α, which applies to any sensor made from this ideal metal and has units of Ω/Ω/°C:

$$\alpha = \frac{0.3661\,\Omega/^\circ\mathrm{C}}{100\,\Omega} = \frac{1}{273.15\,\mathrm{K}} \approx 0.003661\,\Omega/\Omega/^\circ\mathrm{C}$$
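
The arithmetic for this idealized, perfectly proportional metal can be checked in a few lines of Python. The 100Ω at 0°C reference comes from the text; the names `ideal_resistance`, `slope` and `alpha_ideal` are illustrative only, and no real platinum sensor follows this model exactly.

```python
T0_K = 273.15  # 0 °C expressed in Kelvin
R0 = 100.0     # ohms at 0 °C, the hypothetical ideal sensor from the text


def ideal_resistance(t_k: float) -> float:
    """Resistance of an ideal metal whose R is exactly proportional to absolute T."""
    return R0 * t_k / T0_K


# With exact proportionality the slope dR/dT is constant and equals R0 / T0.
slope = R0 / T0_K          # ohms per kelvin (same as ohms per °C)
alpha_ideal = slope / R0   # normalized TCR in ohm/ohm/°C, i.e. 1 / T0

print(f"{slope:.4f} ohm/K")        # 0.3661 ohm/K
print(f"{alpha_ideal:.6f} 1/°C")   # 0.003661 1/°C
```

Dividing by R0 is what removes the dependence on the particular sensor: any ideal-metal sensor, whatever its resistance at 0°C, gives the same α = 1/273.15.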

In fact, the nominal TCR values of available platinum RTDs all fall close to this ideal value. Because the actual temperature coefficient of resistance of a metal is not constant but varies with temperature, an RTD's nominal TCR is specified from its change in resistance over the commonly encountered range of 0°C to 100°C, again divided by its resistance at the fixed point of 0°C so that the value applies to any sensor.

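Depending on the alloy and purity of the platinum, the nominal temperature coefficient α ranges from 0.00375 Ω/Ω/°C at the low end to 0.003927 Ω/Ω/°C for pure platinum. The current international standard for process-control RTDs is 0.00385 Ω/Ω/°C, so a "PT100" RTD has a resistance of 100Ω at 0°C and 138.5Ω at 100°C:

$$\alpha = \frac{R_{100} - R_0}{R_0 \cdot 100\,^\circ\mathrm{C}} = \frac{138.5\,\Omega - 100\,\Omega}{100\,\Omega \cdot 100\,^\circ\mathrm{C}} = 0.00385\,\Omega/\Omega/^\circ\mathrm{C}$$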
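
The 0°C to 100°C definition of the nominal TCR translates directly into a small function. This is a sketch only; `nominal_tcr` is a hypothetical name, and the two resistances would in practice come from the sensor's datasheet or a calibration.

```python
def nominal_tcr(r_0c: float, r_100c: float) -> float:
    """Nominal TCR alpha in ohm/ohm/°C from the resistances at 0 °C and 100 °C.

    alpha = (R(100 °C) - R(0 °C)) / (R(0 °C) * 100 °C)
    """
    if r_0c <= 0.0:
        raise ValueError("resistance at 0 °C must be positive")
    return (r_100c - r_0c) / (r_0c * 100.0)


print(f"{nominal_tcr(100.0, 138.5):.5f}")   # PT100 -> 0.00385
```

The same function returns 0.003927 for a sensor reading 139.27Ω at 100°C, the pure-platinum figure quoted above.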

Thus a PT100 sensor has a nominal linear sensitivity of 0.385 Ω/°C. A standard "PT1000" RTD, with a resistance of 1000Ω at 0°C and 1385Ω at 100°C, is ten times more sensitive, its resistance nominally changing by 3.85 Ω/°C, but it has the same standard TCR value:

$$\alpha = \frac{1385\,\Omega - 1000\,\Omega}{1000\,\Omega \cdot 100\,^\circ\mathrm{C}} = 0.00385\,\Omega/\Omega/^\circ\mathrm{C}$$
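
Using the nominal TCR as a straight-line model gives R = R0(1 + αT), which can be inverted to estimate temperature from a measured resistance. The Python sketch below assumes the standard α = 0.00385 and deliberately ignores the slight curvature of the real response; the function names are hypothetical.

```python
ALPHA = 0.00385  # standard nominal TCR, ohm/ohm/°C


def linear_resistance(t_c: float, r0: float, alpha: float = ALPHA) -> float:
    """Resistance predicted by the straight-line model R = R0 * (1 + alpha * T)."""
    return r0 * (1.0 + alpha * t_c)


def linear_temperature(r_ohm: float, r0: float, alpha: float = ALPHA) -> float:
    """Invert the straight-line model: T = (R / R0 - 1) / alpha, in °C."""
    return (r_ohm / r0 - 1.0) / alpha


print(round(linear_resistance(100.0, 100.0), 3))     # PT100 at 100 °C -> 138.5
print(round(linear_resistance(100.0, 1000.0), 3))    # PT1000 at 100 °C -> 1385.0
print(round(linear_temperature(119.25, 100.0), 3))   # PT100 reading -> 50.0
```

Because R0 appears only as a scale factor, the same two functions serve a PT100 and a PT1000; only the `r0` argument changes.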

RTDs are most often manufactured to have a resistance of 100Ω at 0°C, but varieties are available with baseline resistances of 200Ω, 1kΩ, 2kΩ, 4kΩ, 10kΩ, and other values.
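
For a given TCR, the nominal sensitivity scales with the baseline resistance: dR/dT = α·R0. The sketch below assumes every listed baseline uses the standard α = 0.00385, which the note says is typical but not universal; check the datasheet for a particular part. The names are illustrative.

```python
ALPHA = 0.00385  # assumed standard TCR, ohm/ohm/°C

# Baseline resistances at 0 °C mentioned in the text, in ohms.
BASELINES = (100.0, 200.0, 1_000.0, 2_000.0, 4_000.0, 10_000.0)


def sensitivity(r0: float, alpha: float = ALPHA) -> float:
    """Nominal slope dR/dT in ohm/°C for a sensor with resistance r0 at 0 °C."""
    return alpha * r0


for r0 in BASELINES:
    print(f"R0 = {r0:>8.0f} ohm -> {sensitivity(r0):.3f} ohm/°C")
```

This is the sense in which a PT1000 is "ten times more sensitive" than a PT100: its resistance changes ten times as many ohms per degree.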

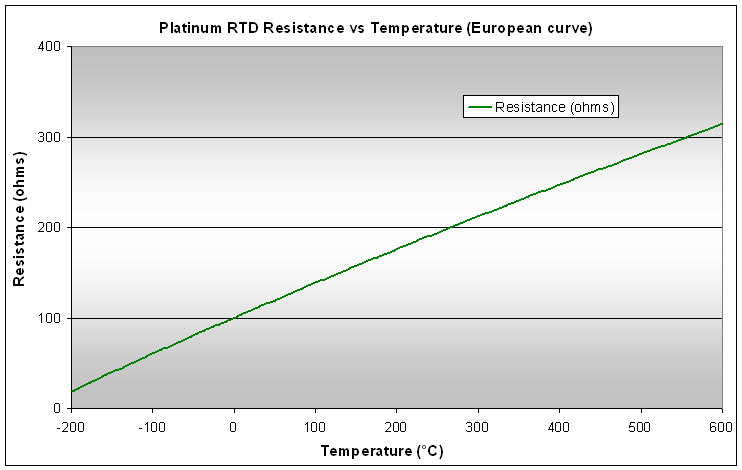

Most RTDs available commercially use the standard TCR value of 0.00385 Ω/Ω/°C, so that their resistance increases from 100 Ω at 0°C to 138.5 Ω at 100°C, with actual intermediate values shown in the following graph. You can see that the response is quite linear, but for precision work the slight curvature of the graph should be taken into account.
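
One common way to account for that curvature, swapped in here because this note does not itself name a method, is the Callendar-Van Dusen equation with the coefficients published in IEC 60751 for α = 0.00385 platinum (A = 3.9083×10^-3 /°C, B = -5.775×10^-7 /°C²). The Python sketch below covers only 0°C and above, where the equation is a quadratic that can be inverted in closed form; below 0°C an additional C term is required and is omitted here. The function names are hypothetical.

```python
import math

# Callendar-Van Dusen coefficients from IEC 60751 (alpha = 0.00385 platinum).
A = 3.9083e-3    # 1/°C
B = -5.775e-7    # 1/°C^2


def cvd_resistance(t_c: float, r0: float = 100.0) -> float:
    """R(T) = R0 * (1 + A*T + B*T^2), the form used for 0 °C <= T <= 850 °C."""
    if t_c < 0.0:
        raise ValueError("this sketch omits the C term needed below 0 °C")
    return r0 * (1.0 + A * t_c + B * t_c * t_c)


def cvd_temperature(r_ohm: float, r0: float = 100.0) -> float:
    """Solve B*T^2 + A*T + (1 - R/R0) = 0 for T in °C (T >= 0 only)."""
    if r_ohm < r0:
        raise ValueError("R below R0 means T < 0 °C, which is not handled here")
    disc = A * A - 4.0 * B * (1.0 - r_ohm / r0)
    # With B < 0, the '+' root is the physical one (it gives T = 0 at R = R0).
    return (-A + math.sqrt(disc)) / (2.0 * B)


r50 = cvd_resistance(50.0)                      # PT100 at 50 °C on the standard curve
print(f"{r50:.3f} ohm")
print(f"{cvd_temperature(r50):.3f} °C")         # recovers 50 °C
# The straight-line model with alpha = 0.00385 reads this resistance roughly 0.38 °C high.
print(f"{(r50 / 100.0 - 1.0) / 0.00385:.3f} °C")
```

At 50°C the standard curve gives about 119.397Ω, which the straight-line model converts to roughly 50.38°C, an error several times larger than the ±0.1°C this note aims for.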

RTD resistance vs temperature chart

Data for the above graph is available in an RTD Resistance vs Temperature Table in Excel.

For more information about RTDs

You can find lots more information on RTDs, including accuracy tables and resistance vs temperature tables, at,