Efficient Thermocouple Calibration and Measurement

The potential or voltage of thermocouples varies almost linearly with temperature. However, almost isn't usually good enough – in most microcontroller-based temperature measurement applications the deviations from linearity must be modeled to measure temperature accurately. Consequently, for accurate temperature measurement in your microcontroller project, you will need to apply a nonlinear calibration equation to a measured thermocouple potential to calculate the temperature. This is already done for you if you use the Thermocouple Wildcard's software drivers.

For those of you not using Mosaic's Thermocouple Wildcard, but looking for accurate equations for thermocouple measurement, we hope the thermocouple calibration coefficients on this page are helpful.

NIST publishes a set of polynomials for converting thermocouple voltage to temperature. However, their polynomial curve fits exhibit errors much greater than those of the fitted data. The rational polynomial coefficients provided here produce errors an order of magnitude lower than the NIST ITS-90 thermocouple coefficients for direct and inverse polynomials.

Computing temperature using the NIST polynomial equation

Calibration tables of thermocouple voltage as a function of temperature are available for all common types of thermocouple. A particularly useful source is the National Institute of Standards and Technology (NIST) database of thermocouple values. NIST also provides a polynomial formula you can use to compute temperature from a measured thermocouple voltage. The NIST polynomial equation uses ten terms (a ninth-order polynomial), and is of the form,

$$T = d_0 + d_1 V + d_2 V^2 + \cdots + d_9 V^9$$

where the di are calibration coefficients taken from the NIST database, T is the thermocouple temperature (in °C), and V is the thermocouple voltage (in millivolts). The thermocouple voltage is either referred to a cold junction at 0°C, or it is a compensated voltage as though it were referred to a cold junction at 0°C.
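A polynomial of the form T = d0 + d1·V + ... + d9·V^9 is usually evaluated with Horner's method, which needs only one multiply and one add per coefficient. The following is a minimal sketch of that evaluation; the function name and the short coefficient list are illustrative placeholders, not values from the NIST database.

```python
def polynomial_temperature(v_mv, d):
    """Evaluate T = d[0] + d[1]*V + ... + d[n]*V**n by Horner's method.

    v_mv is the thermocouple voltage in millivolts (referred to 0 degC);
    d is the list of coefficients, lowest order first.
    """
    t = 0.0
    for coeff in reversed(d):
        t = t * v_mv + coeff
    return t


# Placeholder coefficients for illustration only; these are NOT NIST values.
demo_d = [0.0, 25.0, -0.5]
print(polynomial_temperature(2.0, demo_d))
```

For a real measurement you would pass the ten NIST coefficients for the appropriate thermocouple type and sub-range.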

Because a single equation doesn't work very well over the full temperature range of a thermocouple, NIST breaks up that range into three or four smaller portions and publishes different sets of calibration coefficients for each sub-range.
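In software, using several sub-ranges simply means choosing a coefficient set before evaluating the equation. The sketch below shows one way that selection might look; the sub-range limits and coefficients are hypothetical and would be replaced by the published values for your thermocouple type.

```python
def select_coefficients(v_mv, subranges):
    """Return the coefficient list whose voltage sub-range contains v_mv.

    subranges is a list of (v_min_mv, v_max_mv, coefficients) tuples.
    """
    for v_min, v_max, coeffs in subranges:
        if v_min <= v_mv <= v_max:
            return coeffs
    raise ValueError(f"{v_mv} mV is outside every calibrated sub-range")


# Hypothetical limits and coefficients, for illustration only.
demo_subranges = [
    (-5.0, 0.0, [0.0, 26.0]),
    (0.0, 20.0, [0.0, 25.0]),
    (20.0, 55.0, [-10.0, 25.5]),
]
print(select_coefficients(10.0, demo_subranges))
```

Raising an error for an out-of-range voltage is a design choice made here so that the equation is never silently extrapolated beyond the fitted data.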

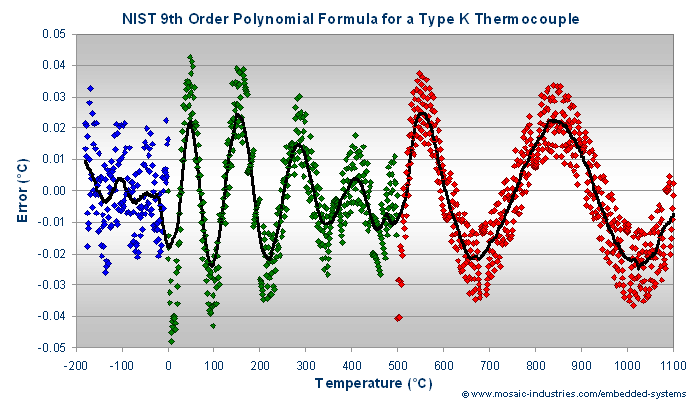

Even so, the NIST polynomial equation doesn't quite compensate for all of a thermocouple's nonlinear response. For example, the following graph shows the temperature errors resulting from applying the NIST formula to the NIST data from a type K thermocouple. The data and calibration equation coefficients are taken from the NIST Thermocouple Database for type K thermocouples.

The measurement errors shown in the graph resulted from applying the polynomial equation to NIST values for the thermocouple voltages to produce a modeled temperature. The difference between the actual temperature and modeled temperature is the calibration error shown. The full temperature range is divided into three smaller ranges (illustrated by the different colored points), and each range is fit using a different set of coefficients.

You can see two sources of variation in the errors:

- For any narrow temperature region there is a vertical band of errors that results from the quantization error in NIST's thermocouple potentials. The thermocouple voltages are reported with one microvolt resolution. Consequently, the rounding error of ±0.5 μV results in a uniformly distributed temperature error of about ±0.01°C. You can see this aliasing of the rounding error as moiré patterns in the graphed errors.

- Superimposed on that rounding error is a much larger residual nonlinearity caused by a lack of perfect fit of the polynomial equation to the data, as shown by the heavier black line in each temperature range. The line was produced by averaging the data over a period comparable to the rounding error's aliasing period.
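The size of the quantization band can be checked with a line of arithmetic: divide the voltage rounding error by the thermocouple's sensitivity. The sketch below assumes a type K sensitivity of about 0.0405 mV/°C near 25°C, which is the p1 value in the type K column of the cold junction coefficient table later on this page; the sensitivity varies with temperature, so the result is only approximate.

```python
rounding_error_mv = 0.0005      # +/-0.5 microvolt, expressed in millivolts
sensitivity_mv_per_c = 0.0405   # assumed type K slope near 25 degC (mV per degC)

# Temperature error = voltage error / sensitivity
temperature_error_c = rounding_error_mv / sensitivity_mv_per_c
print(f"+/-{temperature_error_c:.4f} degC")
```

The result is a little over ±0.012°C, consistent with the roughly ±0.01°C band visible in the graph.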

Unfortunately, the NIST model fails to reduce the systematic errors to levels below the quantization error. An ideal model would reduce the nonlinear residuals to a level well below the quantization error – in that case if your microcontroller is able to measure the thermocouple with better than the 1 microvolt resolution assumed by the NIST calibration table, you will be able to compute temperature with more accuracy than the errors present in the table.

Computing temperature using rational polynomial functions

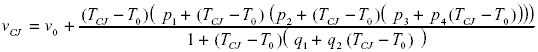

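A nearly ideal model can be created by using a rational polynomial function approximation, which uses a ratio of two lower-order polynomials rather than one high-order polynomial. We fit the same NIST data for type K thermocouples with a rational function of the following form,

$$T = T_o + \frac{(V - V_o)\left(p_1 + p_2 (V - V_o) + p_3 (V - V_o)^2 + p_4 (V - V_o)^3\right)}{1 + q_1 (V - V_o) + q_2 (V - V_o)^2 + q_3 (V - V_o)^3}$$

$$T = T_o + \frac{(V - V_o)\left(p_1 + (V - V_o)\left(p_2 + (V - V_o)\left(p_3 + p_4 (V - V_o)\right)\right)\right)}{1 + (V - V_o)\left(q_1 + (V - V_o)\left(q_2 + q_3 (V - V_o)\right)\right)}$$

where again T is the thermocouple temperature (in °C), V is the thermocouple voltage (in millivolts), and To, Vo, and the pi and qi are coefficients. The function uses a ratio of two polynomials, P/Q, in this case a fourth order to a third order polynomial. The second form of the equation emphasizes the most efficient order of operations.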
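As a rough illustration of the nested order of operations, the sketch below evaluates a rational function with a fourth-order numerator and third-order denominator in (V − Vo), assuming the form T = To + (V−Vo)(p1 + (V−Vo)(p2 + (V−Vo)(p3 + p4(V−Vo)))) / (1 + (V−Vo)(q1 + (V−Vo)(q2 + q3(V−Vo)))). The function name is hypothetical and the demonstration coefficients are placeholders, not fitted values.

```python
def rational_temperature(v_mv, t0, v0, p, q):
    """Evaluate the rational function in nested (Horner-like) form.

    p = (p1, p2, p3, p4) are numerator coefficients,
    q = (q1, q2, q3) are denominator coefficients.
    """
    p1, p2, p3, p4 = p
    q1, q2, q3 = q
    dv = v_mv - v0  # computed once and reused in both polynomials
    numerator = dv * (p1 + dv * (p2 + dv * (p3 + p4 * dv)))
    denominator = 1.0 + dv * (q1 + dv * (q2 + q3 * dv))
    return t0 + numerator / denominator


# Placeholder coefficients for illustration only; NOT fitted values.
demo = dict(t0=25.0, v0=1.0, p=(25.0, 0.1, 0.0, 0.0), q=(0.01, 0.0, 0.0))
print(rational_temperature(2.0, **demo))
```

With real coefficients, a voltage equal to Vo returns exactly To, because the numerator vanishes there.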

The coefficients, To, Vo, pi and qi were found by performing a least squares curve fit to the NIST data.

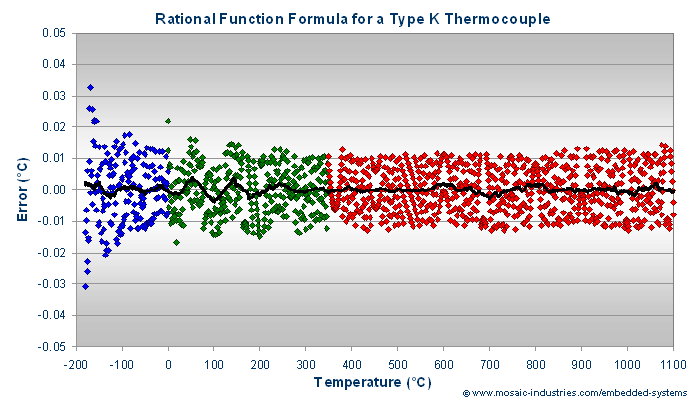

This rational polynomial function uses one fewer coefficient than the NIST polynomial formula and it is computationally faster. Even so, it fits the data more parsimoniously, and it produces much smaller errors, as shown in the following graph.

In the graph the errors between model predictions and the actual temperature are plotted against temperature for a type K thermocouple. From the viewpoint of computational efficiency, this model, using nine coefficients, provides significantly less error than the ten-coefficient polynomial provided by NIST.

Most importantly, the systematic nonlinear errors are much smaller than the quantization errors caused by NIST's rounding the voltage data to the nearest microvolt. Most of the scatter in the above graph results from the quantization error – as revealed by the moiré patterns in the scattered points. By smoothing the quantization errors, as shown by the black line in the above graph, we can see that the model's residual errors are about ±0.002°C, much less than the NIST errors of about ±0.03°C.

Over the different types of thermocouples, the NIST models require ten terms in the polynomial to attain a temperature accuracy of ±0.05°C. The computationally more efficient rational function model uses fewer coefficients and provides an accuracy for most thermocouples of a few thousandths of a degree.

Precomputed calibration coefficients for various thermocouples

Using a least squares curve fitting procedure we found calibration coefficients for type B, E, J, K, N, R, S, and T thermocouples. In each case, the full temperature range is broken into several sub-ranges, and a different set of coefficients is used for each. The coefficients, fitted data and charts of residual errors may be found in this Excel spreadsheet of thermocouple calibration data.

The following tables provide the rational function coefficients for each type of wire thermocouple. Each table is followed by a graph showing the residual errors. For each thermocouple type most of the residual error results from rounding of the NIST-provided voltage values to the nearest microvolt. The rational function approximation interpolates the data well, so computed values of temperature are more accurate than the residual plots would indicate.

B type thermocouple calibration

E type thermocouple calibration

J type thermocouple calibration

K type thermocouple calibration

N type thermocouple calibration

R type thermocouple calibration

S type thermocouple calibration

T type thermocouple calibration

Computing cold junction voltages

Often, your microcontroller may need to perform computational cold junction compensation. That is, your thermocouple may be terminated at a cold junction temperature other than 0°C. If so, you must measure the temperature of the cold junction using another sensor, perhaps a thermistor. You can then compute a cold junction voltage from that measured temperature, and use it to compensate the thermocouple voltage before converting the thermocouple voltage to a temperature.
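The compensation steps described above can be sketched as follows. The function names are illustrative, and the two conversion functions passed in stand for whatever temperature-to-voltage and voltage-to-temperature calibration equations you use. The demonstration uses a toy linear thermocouple of 0.04 mV/°C, which is an assumption made only to keep the example short.

```python
def compensated_temperature(v_measured_mv, t_cold_junction_c,
                            temperature_to_voltage, voltage_to_temperature):
    """Apply computational cold junction compensation.

    1. Convert the measured cold junction temperature to a voltage.
    2. Add it to the measured thermocouple voltage, giving the voltage
       the thermocouple would produce with its cold junction at 0 degC.
    3. Convert that compensated voltage to temperature.
    """
    v_cj = temperature_to_voltage(t_cold_junction_c)
    v_compensated = v_measured_mv + v_cj
    return voltage_to_temperature(v_compensated)


# Toy linear model for illustration only; real thermocouples are nonlinear.
SLOPE_MV_PER_C = 0.04

def toy_t_to_v(t_c):
    return SLOPE_MV_PER_C * t_c

def toy_v_to_t(v_mv):
    return v_mv / SLOPE_MV_PER_C

# 4.0 mV measured with the cold junction at 25 degC
print(compensated_temperature(4.0, 25.0, toy_t_to_v, toy_v_to_t))
```

Note that the compensation is done in the voltage domain: adding the cold junction temperature directly to a temperature computed from the uncompensated voltage would only be correct for a perfectly linear thermocouple.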

In that case you need the inverse transform, that is, an equation for converting temperature into voltage. You don't need a valid equation for a wide temperature range as the cold junction is likely to be placed at ambient temperature. Usually a range of -20 to +70°C is sufficient.

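To convert temperature to voltage, you can again use a rational function approximation of the form,

$$V_{CJ} = V_o + \frac{(T_{CJ} - T_o)\left(p_1 + (T_{CJ} - T_o)\left(p_2 + (T_{CJ} - T_o)\left(p_3 + p_4 (T_{CJ} - T_o)\right)\right)\right)}{1 + (T_{CJ} - T_o)\left(q_1 + q_2 (T_{CJ} - T_o)\right)}$$

where TCJ is the cold junction temperature (in °C), VCJ is the computed cold junction voltage (in millivolts), and To, Vo, and the pi and qi are coefficients.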
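A minimal sketch of the temperature-to-voltage conversion follows, assuming the nested form VCJ = Vo + (TCJ−To)(p1 + (TCJ−To)(p2 + (TCJ−To)(p3 + p4(TCJ−To)))) / (1 + (TCJ−To)(q1 + q2(TCJ−To))). The coefficients are copied from the type K column of the coefficient table in this section; the function and dictionary names are illustrative.

```python
# Type K cold junction coefficients, copied from the table in this section.
TYPE_K_CJ = {
    "t0": 2.5000000E+01,
    "v0": 1.0003453E+00,
    "p": (4.0514854E-02, -3.8789638E-05, -2.8608478E-06, -9.5367041E-10),
    "q": (-1.3948675E-03, -6.7976627E-05),
}


def cold_junction_voltage(t_cj_c, c=TYPE_K_CJ):
    """Return the cold junction voltage in mV for a temperature in degC."""
    p1, p2, p3, p4 = c["p"]
    q1, q2 = c["q"]
    dt = t_cj_c - c["t0"]
    numerator = dt * (p1 + dt * (p2 + dt * (p3 + p4 * dt)))
    denominator = 1.0 + dt * (q1 + q2 * dt)
    return c["v0"] + numerator / denominator


for t in (-20.0, 0.0, 25.0, 70.0):
    print(t, cold_junction_voltage(t))
```

As a sanity check, the values at -20°C and 70°C should agree with the voltage limits listed for type K in the table (-0.778 mV and 2.851 mV) to within about a microvolt, and the value at 0°C should be very close to zero.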

Table of Coefficients to Convert Temperature to Voltage (Types B, E, J, K)

| | Type B | Type E | Type J | Type K |
|---|---|---|---|---|
| Voltage: | -0.003 to 0.011 mV | -1.152 to 4.330 mV | -0.995 to 3.650 mV | -0.778 to 2.851 mV |
| Temperature: | 0 to 70°C | -20 to 70°C | -20 to 70°C | -20 to 70°C |
| Coefficients | | | | |
| To | 4.2000000E+01 | 2.5000000E+01 | 2.5000000E+01 | 2.5000000E+01 |
| Vo | 3.3933898E-04 | 1.4950582E+00 | 1.2773432E+00 | 1.0003453E+00 |
| p1 | 2.1196684E-04 | 6.0958443E-02 | 5.1744084E-02 | 4.0514854E-02 |
| p2 | 3.3801250E-06 | -2.7351789E-04 | -5.4138663E-05 | -3.8789638E-05 |
| p3 | -1.4793289E-07 | -1.9130146E-05 | -2.2895769E-06 | -2.8608478E-06 |
| p4 | -3.3571424E-09 | -1.3948840E-08 | -7.7947143E-10 | -9.5367041E-10 |
| q1 | -1.0920410E-02 | -5.2382378E-03 | -1.5173342E-03 | -1.3948675E-03 |
| q2 | -4.9782932E-04 | -3.0970168E-04 | -4.2314514E-05 | -6.7976627E-05 |

Table of Coefficients to Convert Temperature to Voltage (Types N, R, S, T)

| | Type N | Type R | Type S | Type T |
|---|---|---|---|---|
| Voltage: | -0.518 to 1.902 mV | -0.100 to 0.431 mV | -0.103 to 0.433 mV | -0.757 to 2.909 mV |
| Temperature: | -20 to 70°C | -20 to 70°C | -20 to 70°C | -20 to 70°C |
| Coefficients | | | | |
| To | 7.0000000E+00 | 2.5000000E+01 | 2.5000000E+01 | 2.5000000E+01 |
| Vo | 1.8210024E-01 | 1.4067016E-01 | 1.4269163E-01 | 9.9198279E-01 |
| p1 | 2.6228256E-02 | 5.9330356E-03 | 5.9829057E-03 | 4.0716564E-02 |
| p2 | -1.5485539E-04 | 2.7736904E-05 | 4.5292259E-06 | 7.1170297E-04 |
| p3 | 2.1366031E-06 | -1.0819644E-06 | -1.3380281E-06 | 6.8782631E-07 |
| p4 | 9.2047105E-10 | -2.3098349E-09 | -2.3742577E-09 | 4.3295061E-11 |
| q1 | -6.4070932E-03 | 2.6146871E-03 | -1.0650446E-03 | 1.6458102E-02 |
| q2 | 8.2161781E-05 | -1.8621487E-04 | -2.2042420E-04 | 0.0000000E+00 |

Again, these coefficients, fitted data and charts of residual errors may be found in this Excel spreadsheet of thermocouple data.

Why aren't rational polynomial functions used more?

For fitting nonlinear data, rational polynomial functions are more flexible, efficient and parsimonious than simple polynomial functions. They fit a lot of nonlinear data better than simple polynomials because for the same number of degrees of freedom (adjustable coefficients) they don't introduce so many wiggles.

So, why aren't they used more? And why did NIST use simple polynomials? NIST settled on 9th order polynomials, which despite their high order don't account well enough for the systematic nonlinearity in the data, instead of using the more parsimonious and accurate rational polynomials. I think they did that because high-order polynomials are easy to curve fit, while rational polynomial functions are not. Or, maybe they just weren't aware of the usefulness of rational polynomial functions at the time.

I suspect that others haven't used rational polynomial functions to fit data much because of the difficulty in doing the curve fits to determine the coefficients. You can use canned packages to do curve fits to polynomials, but not to rational polynomial functions. It's easy to do a least squares fit using a polynomial because there are only zeros, and no poles. The polynomial might go shooting off to infinity, but slowly, outside of the fitted domain, and never within the fitted domain. So, the function interpolates with finite errors, and we usually don't care if it can't be extrapolated beyond the desired domain.

But when fitting quotients of polynomials there are also poles (zeros in the denominator). If those poles occur within the domain of the fit, they cause the function to blow up at the pole, and the blow-up can be very fast (or brittle as the math dudes say, meaning it can go shooting off to infinity in a very small interval of the domain). Even for fairly dense data the blow-up may not be noticed when evaluating the function only at the data points, but the pole then causes unbounded errors when the function is interpolated near it. So the fit must be done in a way that guarantees the function has no poles within the domain. When I do the fit I do it so that the denominator cannot have a zero within the domain of interest, and after doing the fit I check it to verify that it does not. I provided the Excel spreadsheet here so that people can play with the sheet that actually does the curve fit if they want to learn how to fit other data.
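The verification step can be illustrated for the cold junction equations, whose denominator 1 + q1·(T − To) + q2·(T − To)² is only quadratic, so its zeros can be found exactly. This is a sketch of one possible check, not the procedure used in the spreadsheet; the function names are hypothetical, and the cubic denominator of the voltage-to-temperature form would need a cubic root finder instead.

```python
import math


def denominator_poles(t0, q1, q2):
    """Real temperatures where 1 + q1*dT + q2*dT**2 = 0, with dT = T - t0."""
    if q2 == 0.0:
        # Denominator is linear (or constant): at most one zero.
        return [] if q1 == 0.0 else [t0 - 1.0 / q1]
    disc = q1 * q1 - 4.0 * q2
    if disc < 0.0:
        return []  # complex roots only: no pole on the real axis
    root = math.sqrt(disc)
    return sorted(t0 + (-q1 + s * root) / (2.0 * q2) for s in (-1.0, 1.0))


def has_pole_in_domain(t0, q1, q2, t_min, t_max):
    """True if any denominator zero lies within [t_min, t_max]."""
    return any(t_min <= t <= t_max for t in denominator_poles(t0, q1, q2))


# Type K cold junction coefficients (To, q1, q2) from the table on this page.
print(denominator_poles(25.0, -1.3948675E-03, -6.7976627E-05))
print(has_pole_in_domain(25.0, -1.3948675E-03, -6.7976627E-05, -20.0, 70.0))
```

For the type K coefficients the denominator zeros fall at roughly -107°C and +136°C, both well outside the fitted -20 to 70°C domain. Solving for the zeros directly avoids the trap described above, in which sampling the function only at the data points can miss a narrow pole.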